Cisco Nexus 1000V Distributed Virtual Switch

Another Nexus 1000V blog post? Yes. However, the focus of my contribution to the subject is bridging the conceptual divide that exists for traditional network engineers: to provide an intuitive understanding of what is going on, and to help them make sense of a product whose setup is radically different from what they have previously dealt with.

For those who want something more, you can look at the following links:

Marketing Overview Video (5 Minutes)

Cisco Nexus 1000V “Data Sheet”

Cisco Community Support for Nexus 1000V

The Familiar

When logged into the NX1KV, let’s first take a look at the “show modules” output:

    Mod  Ports  Module-Type                       Model               Status
    ---  -----  --------------------------------  ------------------  ------------
    1    0      Virtual Supervisor Module         Nexus1000V          active *
    2    0      Virtual Supervisor Module         Nexus1000V          ha-standby
    3    1022   Virtual Ethernet Module           NA                  ok
    4    1022   Virtual Ethernet Module           NA                  ok
    5    1022   Virtual Ethernet Module           NA                  ok
    6    1022   Virtual Ethernet Module           NA                  ok

    Mod  Sw                  Hw
    ---  ------------------  ------------------------------------------------
    1    5.2(1)SV3(1.2)      0.0
    2    5.2(1)SV3(1.2)      0.0
    3    5.2(1)SV3(1.2)      VMware ESXi 5.5.0 Releasebuild-2068190 (3.2)
    4    5.2(1)SV3(1.2)      VMware ESXi 5.5.0 Releasebuild-2068190 (3.2)
    5    5.2(1)SV3(1.2)      VMware ESXi 5.5.0 Releasebuild-2068190 (3.2)
    6    5.2(1)SV3(1.2)      VMware ESXi 5.5.0 Releasebuild-2068190 (3.2)

    Mod  Server-IP        Server-UUID                           Server-Name
    ---  ---------------  ------------------------------------  --------------------
    1    10.0.0.1         NA                                    NA
    2    10.0.0.1         NA                                    NA
    3    10.0.0.11        26594131-5953-0100-0a1a-200000000004  esx-1
    4    10.0.0.12        26594131-5953-0100-0a1a-200000000003  esx-2
    5    10.0.0.13        26594131-5953-0100-0a1a-200000000040  esx-3
    6    10.0.0.14        26594131-5953-0100-0a1a-20000000003f  esx-4

    * this terminal session

The first block of output looks eerily familiar, right? The first two modules resemble supervisor engines. Modules 3-6 look like line cards… with a LOT of ports (over 1000 each). There’s no “model” for the line cards, which is a little strange to see.

The second block of output isn’t that strange either, except that the “Hw” column shows only a placeholder 0.0 for our supervisor modules and the line cards say “VMware”. The “Sw” column refers to the Nexus 1000V version running on each module. For the line cards, the “Hw” column shows the VMware ESXi version and build of the host on which that module runs.

The third block is unique to the Nexus 1000V: it lists the management IP address of each component, along with the server universally unique identifier (UUID) and hostname. This information tells you on which server or appliance in your network the component resides. This block is the first hint that we aren’t dealing with a regular sheet metal chassis.
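If you want to work with this inventory programmatically, here is a minimal Python sketch that reads the rows of the first block and counts supervisors, line cards, and line-card ports. The names `parse_modules` and `summarize` are my own, and the regular expression assumes rows shaped like the ones shown in this post; other releases may format the output differently.

```python
import re

# Rows copied from the first block of the output shown in this post.
SAMPLE = """\
1 0 Virtual Supervisor Module Nexus1000V active *
2 0 Virtual Supervisor Module Nexus1000V ha-standby
3 1022 Virtual Ethernet Module NA ok
4 1022 Virtual Ethernet Module NA ok
5 1022 Virtual Ethernet Module NA ok
6 1022 Virtual Ethernet Module NA ok
"""

# Assumed row shape: Mod, Ports, Module-Type, Model, Status.
ROW = re.compile(r"^\s*(\d+)\s+(\d+)\s+(Virtual \w+ Module)\s+(\S+)\s+(\S+)")


def parse_modules(text):
    """Return one dict per module row; lines that do not match are skipped."""
    modules = []
    for line in text.splitlines():
        match = ROW.match(line)
        if match:
            mod, ports, mtype, model, status = match.groups()
            modules.append({
                "mod": int(mod),
                "ports": int(ports),
                "type": mtype,
                "model": model,
                "status": status,
            })
    return modules


def summarize(modules):
    """Count VSMs ("supervisors") and VEMs ("line cards") and total VEM ports."""
    vsms = [m for m in modules if "Supervisor" in m["type"]]
    vems = [m for m in modules if "Ethernet" in m["type"]]
    return {
        "vsm_count": len(vsms),
        "vem_count": len(vems),
        "vem_ports": sum(m["ports"] for m in vems),
    }


print(summarize(parse_modules(SAMPLE)))
```

For the sample rows, this reports two VSMs and four VEMs carrying 4088 ports between them.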

The Land of Oz

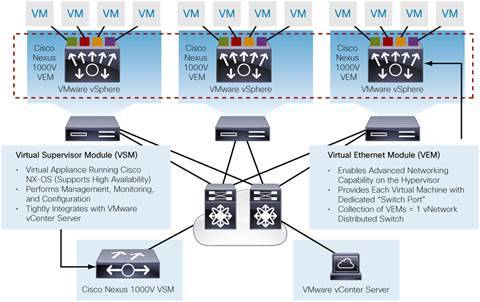

What’s the catch? No suprise to many readers: none of those components are physical hardware. It’s either a virtual machine (VSM) or a package/driver (VEM) in VMware. There are no chassis backplanes for the control or data planes. A functioning network must exist between all the components. To explain any more, we need a drawing. The below figure is found on Cisco’s data sheet page. It is their figure, not mine.

The VEMs (remember, line cards) are software drivers that reside in each VMware vSphere host (aka the hypervisor). The VEMs forward traffic between the LAN and the VMs on the host. Conceptually, though, a VEM operates very much like something such as a Catalyst 3560-X 48-port switch. In a 3560-X, you have SFP uplinks, configured as VLAN trunks, that connect into the distribution layer. You have 48 10/100/1000 host ports for clients to connect. The VEMs operate similarly – you have switch “uplinks” (Ethernet ports), but they are the physical VMware server NICs that connect into the physical access layer of your network. You still trunk your VLANs across those uplinks/NICs into your network. The only non-physical elements here are your “host ports” (vEthernet ports) – VMware connects each VM, in software, to a distinct VEM port.

The great part about this technology is that you don’t have to individually manage each and every VEM as a separate switch. This is where the VSM comes in: it is the supervisor engine of the “distributed virtual switch” (DVS) – the term given to the VSM and all the VEMs associated with it. As in any chassis-based switch, you log into the management interface of the VSM and you see the global switch settings for that (distributed virtual) switch. It is NX-OS at the core, so it’ll behave similarly to a Nexus 5500 or a Nexus 7700 (your usual Cisco model-dependent eccentricities exist, of course). At the CLI, you see all the interfaces in that DVS instance – uplinks for VSM and VEM, client ports that connect a VM to the network, and which “module” (VMware server) those ports reside on (see the “show modules” output at the beginning of this post).

There are two other aspects of this environment that differ from a physical switch. First, the control plane of this DVS is the physical Ethernet network. Communication between the VSM and the VEMs uses (in the “Layer 2” style of configuration) two VLANs: control and packet. These can use the same VLAN or distinct VLANs. These VLANs must span the data center between every VMware host with a VEM and the VSMs (which could themselves be VMs on a VMware host).
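Because the control and packet VLANs must reach every host, a quick sanity check is to compare the VLANs trunked to each host against the two you need. The sketch below is only an illustration: the VLAN IDs (260 and 261) and the per-host trunk lists are made-up values, and `missing_vlans` is a hypothetical helper, not something the Nexus 1000V provides.

```python
def missing_vlans(required, trunked_by_host):
    """Return {host: sorted list of required VLANs that are not trunked to it}."""
    gaps = {}
    for host, vlans in trunked_by_host.items():
        missing = sorted(set(required) - set(vlans))
        if missing:
            gaps[host] = missing
    return gaps


# Hypothetical VLAN IDs; substitute your own control and packet VLANs.
CONTROL_VLAN = 260
PACKET_VLAN = 261

# Hypothetical record of which VLANs are trunked to each host's uplinks.
trunks = {
    "esx-1": {10, 20, 260, 261},
    "esx-2": {10, 20, 260, 261},
    "esx-3": {10, 20, 260},  # packet VLAN was never added to this trunk
    "esx-4": {10, 20, 260, 261},
}

gaps = missing_vlans({CONTROL_VLAN, PACKET_VLAN}, trunks)
print(gaps)  # {'esx-3': [261]}
```

In this made-up data, the VEM on esx-3 would be cut off from the packet VLAN, which is the kind of gap worth catching before you add a host to the DVS.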

Second, the VSM has to work very closely with VMware. How else does the DVS know that a VM is connected to a given port? How does VMware know what VLANs (more correctly, port profiles) exist in order to configure a VM? This coordination is established through a “trust relationship” between the VSM and vCenter (the management component of a given VMware environment). These components talk via TCP/IP over port 80 (watch your ACLs!) and require a one-time setup. Cisco provides some software (Virtual Switch Update Manager) to help with the VSM installation on VMware and its integration with vCenter, lowering the barrier to entry.
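If you suspect an ACL is in the way, a plain TCP connection test is a reasonable first step. Here is a minimal Python sketch using only the standard library; `tcp_port_open` is my own helper name and the hostname in the usage comment is a placeholder. Keep in mind that it only tests the path from wherever you run it, so run it from a machine on the same management network as the VSM for the result to mean much.

```python
import socket


def tcp_port_open(host, port, timeout=3.0):
    """Return True if a TCP connection to (host, port) succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and name resolution failures.
        return False


# Example usage (placeholder hostname, replace with your vCenter address):
#   tcp_port_open("vcenter.example.com", 80)
```

A `False` result does not say why the connection failed, only that it did; an ACL, a routing problem, and a stopped service all look the same from here.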

The Punch Line

Okay, you are starting to feel that this isn’t terribly different from normal life. But there seems to be a lot of complexity. Why deploy this technology? Well, I’m not going to give you a sales pitch. Just consider this: in a traditional “core, distribution, access” network, imagine having to troubleshoot access problems for desktop workstations from the upstream distribution layer only, rather than from the access switch to which they are directly connected. Doesn’t sound fun, does it? Now, imagine having to do QoS. Or spanning a port to understand a traffic issue.

Recap

If you’ve gotten here, you may be thinking: where’s the technical meat? I don’t see any configuration help or guidance. What gives?

The goal of this post is only to help with the concepts and to “de-mystify” the operation of this particular “virtual” switch. I am definitely planning additional posts with some meat. Stay tuned.